OpenAI turns to age prediction to make ChatGPT safer for teens

A new feature uses behavioural signals to estimate whether an account belongs to someone under 18, automatically tightening content rules while raising fresh questions about accuracy, privacy and parental control.

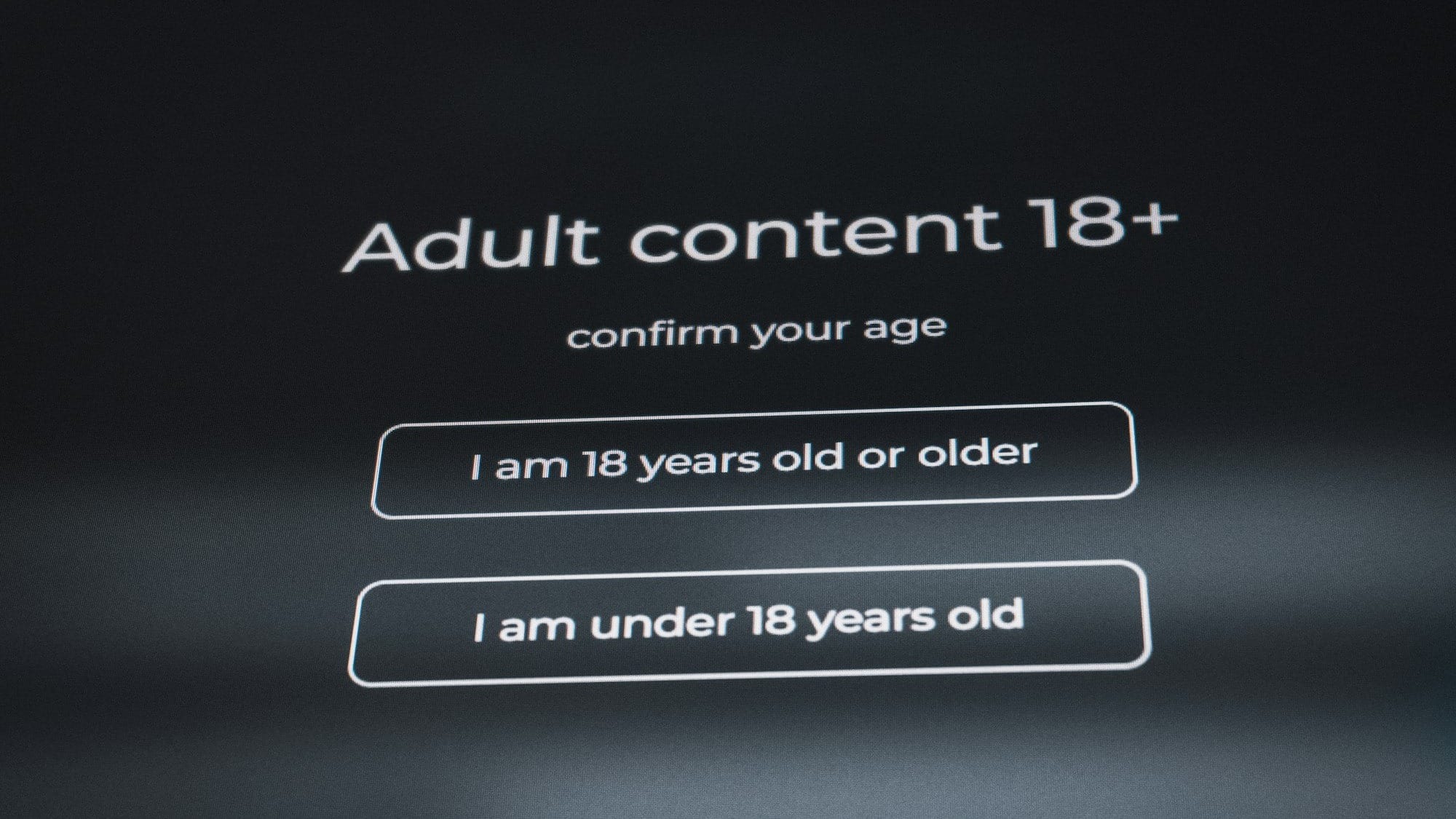

OpenAI is rolling out an age prediction feature across consumer ChatGPT plans, aiming to identify accounts that are likely to belong to users under 18 and apply additional safety protections by default.

In a statement, the company said the system relies on a combination of behavioural and account-level signals rather than a single data point. These include how long an account has existed, typical times of day when it is active, patterns of use over time and a user’s stated age. Taken together, these signals are used to estimate whether an account is more likely to be operated by a minor.

In lay terms, this is closer to pattern recognition than age verification. The system does not “know” a user’s age in the way a passport does. Instead, it looks for usage habits that tend to correlate with younger users, such as activity concentrated in the hours after school or rapid shifts in interests, and weighs them alongside explicit information the user has provided.

When the model estimates that an account may belong to someone under 18, ChatGPT automatically switches that account into a more restricted experience. OpenAI said this is designed to reduce exposure to content that may be inappropriate or harmful to minors, particularly in cases where confidence in a user’s age is low or information is incomplete.

The additional protections cover a wide range of material. These include graphic violence or gory content; viral challenges that could encourage risky or harmful behaviour; sexual, romantic or violent role play; depictions of self-harm; and content that promotes extreme beauty standards, unhealthy dieting or body shaming. In effect, the system errs on the side of caution, defaulting to a safer setting unless it has high confidence that the user is an adult.
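The "default to safer" rule described above can be illustrated with a short sketch. This is not OpenAI's implementation: the `AgeEstimate` type, the `choose_experience` function and the 0.9 threshold are all hypothetical, chosen only to show how a system might fall back to the restricted experience whenever it lacks high confidence that a user is an adult.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cut-off; the article does not state any real threshold.
ADULT_CONFIDENCE_THRESHOLD = 0.9


@dataclass
class AgeEstimate:
    """Illustrative container for an age prediction (not a real API)."""
    p_adult: Optional[float]      # estimated probability the user is 18+, None if signals are incomplete
    verified_adult: bool = False  # True once the user has confirmed their age


def choose_experience(estimate: AgeEstimate,
                      threshold: float = ADULT_CONFIDENCE_THRESHOLD) -> str:
    """Return "full" only with high confidence of adulthood, else "restricted"."""
    if estimate.verified_adult:
        # Explicit verification overrides the behavioural estimate.
        return "full"
    if estimate.p_adult is None:
        # Incomplete information: err on the side of caution.
        return "restricted"
    return "full" if estimate.p_adult >= threshold else "restricted"
```

Under these assumptions, an account with an adult probability of 0.6 would still receive the restricted experience, which is how such a rule can catch some adults as well as teens.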

OpenAI acknowledged that automated estimates will not always be correct. Users who are mistakenly placed into the under-18 experience can confirm their age and restore full access. This is done by submitting a selfie through Persona, a third-party identity verification provider. The company said the process can be initiated through Settings > Account.

The rollout also expands the role of parental controls. According to OpenAI, parents can customise a teen’s ChatGPT experience by setting “quiet hours” during which the service cannot be used, controlling features such as memory or whether conversations are used to help train models, and receiving notifications if the system detects signs of acute distress. For families, this positions ChatGPT less as a standalone app and more as a managed digital environment.

Age prediction sits in an uncomfortable space between safety and surveillance. Platforms have long struggled to enforce age-appropriate experiences without asking users to hand over sensitive documents. Behaviour-based estimation avoids mandatory ID checks, but it introduces uncertainty and raises questions about transparency and bias. Patterns that look “teen-like” in aggregate may also describe some adult users, particularly those with irregular schedules or niche interests.

OpenAI said it is learning from the initial rollout and will continue to refine the model’s accuracy. The company also said the feature will be introduced in the European Union in the coming weeks, a region where digital services face stricter requirements around child protection and data handling.

The move reflects growing pressure on technology companies to demonstrate proactive safeguards for younger users. Regulators in multiple jurisdictions have signalled that age-appropriate design is no longer optional, particularly for services powered by generative AI that can produce realistic but potentially harmful content on demand.

By combining probabilistic age estimation with parental controls and optional verification, OpenAI is attempting a middle path. It avoids blanket identity checks while still intervening when there is a reasonable chance a user is under 18. Whether that balance satisfies parents, regulators and privacy advocates will depend less on the idea than on how well it works in practice.

As with many AI safety features, the challenge lies in the edge cases. A system that is too strict risks frustrating legitimate users; one that is too permissive undermines its stated purpose. OpenAI’s decision to default to a safer experience when in doubt shows which side of that trade-off it favours, at least for now.

The Recap

- OpenAI adds age prediction to ChatGPT consumer plans.

- Model uses account-level and behavioural signals to estimate whether a user is under 18.

- Age prediction to roll out in the EU in coming weeks.