Nvidia’s GPUs have become the most important chips in the world. Once built primarily for gaming, they now power artificial intelligence, cloud supercomputing, autonomous vehicles, scientific research and the most demanding visual workloads on the planet. This guide explains what Nvidia GPUs are, how they work, why they lead the market and what comes next in 2026 and beyond.

What Nvidia GPUs Are and Why They Matter

A graphics processing unit is designed for large-scale parallel mathematical operations. A CPU has a relatively small number of powerful cores that work through tasks largely in sequence. A GPU instead spreads thousands of simpler calculations across many cores at once. For a clear primer, AWS provides a detailed overview of what a GPU is and how it differs from traditional processors.
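The following minimal Python sketch makes the contrast concrete. It assumes a toy image stored as a flat list of pixel values and uses a hypothetical `brighten` operation. It runs on a CPU and uses no GPU at all. Its only purpose is to show the property GPUs exploit: each output pixel depends on exactly one input pixel. The elements can therefore be processed in any order, or all at once, without changing the result.

```python
import random

def brighten(pixel: int, delta: int) -> int:
    """Per-element work: one output pixel from one input pixel, clamped to 255."""
    return min(255, pixel + delta)

def cpu_style(pixels, delta: int):
    # Sequential processing: visit the pixels strictly in index order.
    return [brighten(p, delta) for p in pixels]

def gpu_style(pixels, delta: int, seed: int = 0):
    # Data-parallel view: no pixel depends on another, so the work could be
    # handed to thousands of GPU threads at once. Here we only simulate that
    # independence by visiting the indices in a shuffled order.
    out = [0] * len(pixels)
    order = list(range(len(pixels)))
    random.Random(seed).shuffle(order)
    for i in order:
        out[i] = brighten(pixels[i], delta)
    return out

pixels = [10, 100, 200, 250]
print(cpu_style(pixels, 40))                           # [50, 140, 240, 255]
print(gpu_style(pixels, 40) == cpu_style(pixels, 40))  # True
```

On real hardware the speed-up comes from running those independent per-pixel calls simultaneously. The shuffle here only demonstrates that doing so is safe.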

Nvidia launched the modern GPU era with the GeForce 256 in 1999. Encyclopaedia Britannica’s article on Nvidia’s history and innovations explains how this shift transformed gaming and opened the door to general-purpose computing.

Why Nvidia Dominates the GPU Market

Nvidia commands roughly 92% of the discrete GPU market. Its dominance comes from four pillars:

- CUDA software

- Leadership in AI hardware

- A deep developer ecosystem

- A product stack ranging from gaming to supercomputing

Nasdaq’s industry analysis breaks down this trend in its GPU market outlook.

Core Uses of Nvidia GPUs in 2025

1. Gaming and Real-Time Graphics

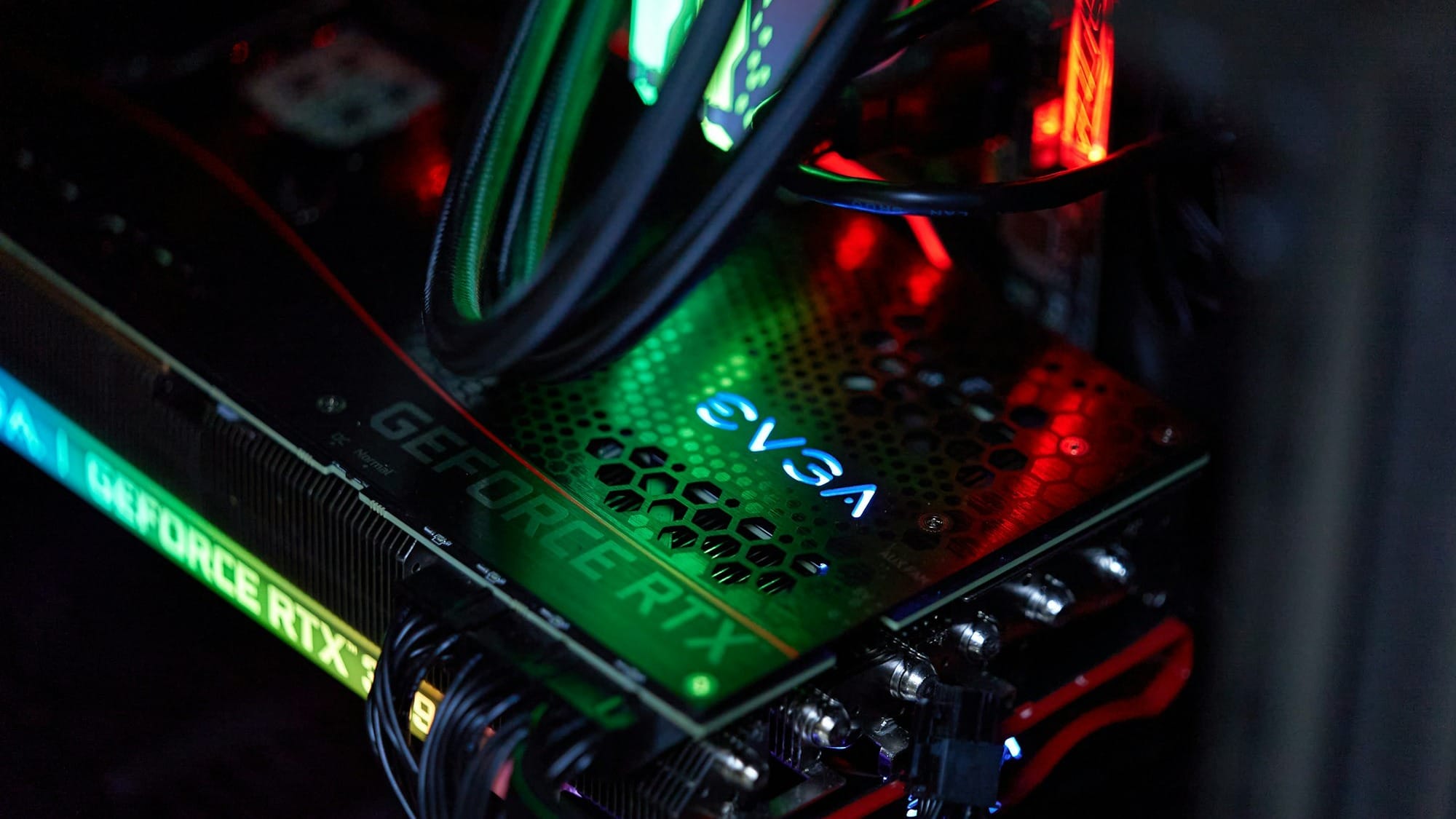

Nvidia’s GeForce GPUs remain the backbone of PC gaming. They enable high-frame-rate rendering and advanced lighting, and RTX models add hardware-accelerated ray tracing. The fundamentals are documented in Wikipedia’s GPU overview.

2. Professional Visualisation and 3D Workflows

Architects, engineers, animators and VFX studios depend on Nvidia workstation hardware. The company’s evolution into enterprise compute is detailed in Wikipedia’s Nvidia entry.

3. Artificial Intelligence and Machine Learning

CUDA, released in 2007, let developers use GPUs for non-graphics computation. OpenMetal’s analysis of Nvidia GPUs in cloud and AI computing shows how CUDA made GPU-accelerated neural network training practical, paving the way for models such as GPT.
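Much of the arithmetic in a neural network is matrix multiplication. The minimal pure-Python sketch below implements one fully connected layer with a ReLU activation. The names `dense_relu`, `weights` and `bias`, and the tiny numbers, are illustrative assumptions. The sketch shows why this workload suits GPUs: every output neuron is an independent dot product. Real frameworks do not loop like this. They hand the work to CUDA-based GPU libraries such as cuBLAS and cuDNN.

```python
def dense_relu(weights, bias, x):
    """Fully connected layer: y[j] = max(0, b[j] + sum_k W[j][k] * x[k])."""
    outputs = []
    for j, row in enumerate(weights):
        # Neuron j needs only its own weight row, the shared input x and its
        # bias, so a GPU can compute every neuron at the same time.
        acc = bias[j]
        for w, xk in zip(row, x):
            acc += w * xk
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs

weights = [[1.0, 2.0], [-3.0, 1.0]]
bias = [0.5, 0.0]
print(dense_relu(weights, bias, [1.0, 2.0]))  # [5.5, 0.0]
```

Training repeats this kind of operation, plus its gradients, billions of times over far larger matrices. That scale is where thousands of GPU cores pay off.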

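Today Nvidia’s data-centre GPUs, such as the A100 (Ampere) and H100 (Hopper), often deployed in DGX systems, train and serve many of the major LLMs, including OpenAI’s. AI firms depend on Nvidia hardware for training and inference at scale. Some, notably Google DeepMind, rely heavily on in-house accelerators such as Google’s TPUs.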

4. Scientific Research and Cloud Supercomputing

Researchers use Nvidia GPUs for climate modelling, genomics, astrophysics and molecular simulation. Cloud platforms, including AWS, offer this power on demand, giving scientists both speed and affordability.

5. Blockchain and Cryptocurrency

During the crypto boom, GPUs dominated proof-of-work mining for coins such as Ethereum, until Ethereum switched to proof of stake in 2022. Atlantic.net outlines these workloads in its guide to GPU applications across industries.
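Proof-of-work mining is a brute-force search, which is why GPUs suited it. The minimal Python sketch below is a simplified toy, not any real coin’s algorithm. Bitcoin uses double SHA-256 against a numeric target, and Ethereum used the memory-hard Ethash. The toy looks for a nonce whose SHA-256 digest starts with a chosen number of hex zeros. The function names and the string-based block format are illustrative assumptions. Every nonce can be checked independently, so a GPU can give each thread its own slice of the nonce range.

```python
import hashlib

def block_hash(block_data: str, nonce: int) -> str:
    """SHA-256 of the block data with the nonce appended (toy format)."""
    return hashlib.sha256(f"{block_data}:{nonce}".encode()).hexdigest()

def mine(block_data: str, difficulty: int, start: int = 0, stop: int = 10_000_000):
    """Search nonces in [start, stop) for a digest with `difficulty` leading hex zeros.

    Each nonce is tested independently, so separate workers (or GPU threads)
    could search disjoint [start, stop) ranges in parallel.
    """
    target_prefix = "0" * difficulty
    for nonce in range(start, stop):
        digest = block_hash(block_data, nonce)
        if digest.startswith(target_prefix):
            return nonce, digest
    return None  # no valid nonce in this range

def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    # Checking a claimed solution costs a single hash, unlike the search itself.
    return block_hash(block_data, nonce).startswith("0" * difficulty)

result = mine("example block", difficulty=4)
if result is not None:
    nonce, digest = result
    print(nonce, digest, verify("example block", nonce, 4))
```

Raising `difficulty` by one multiplies the expected number of attempts by 16. Miners therefore raced to test as many nonces per second as possible.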

6. Autonomous Vehicles and Robotics

Nvidia’s Drive and Tegra platforms support object recognition, sensor fusion and real-time decision-making. Many self-driving car developers use Nvidia silicon for perception and planning.

Nvidia’s Biggest Innovations

CUDA and the Rise of GPGPU Computing

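CUDA is the secret sauce behind Nvidia’s success. It transformed GPUs into general-purpose compute engines by letting developers write programs, called kernels, in extensions of C and C++ that run across thousands of GPU threads at once. Without CUDA, modern AI would look very different.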
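In CUDA, a programmer writes a kernel that every GPU thread runs. Each thread works out which element it owns from the built-in variables `blockIdx.x`, `blockDim.x` and `threadIdx.x`. The minimal Python sketch below only simulates that indexing scheme on a CPU, using SAXPY (`out = a * x + y`) as the example. The `launch` helper and the block size of 256 are illustrative assumptions. In real CUDA C++ the kernel would be a `__global__` function launched with the `<<<grid, block>>>` syntax.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y, out):
    # Mirrors the CUDA idiom: i = blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < n:  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]

def launch(n, a, x, y, block_dim=256):
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    out = [0.0] * n
    # On a GPU every (block, thread) pair runs concurrently; here they run in turn.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y, out)
    return out, grid_dim

n = 1000
x = [float(i) for i in range(n)]
y = [1.0] * n
out, blocks = launch(n, 2.0, x, y)
print(blocks)   # 4
print(out[:3])  # [1.0, 3.0, 5.0]
```

With 1,000 elements and 256 threads per block, four blocks are launched. The bounds check leaves the 24 surplus threads idle, which is the same pattern real CUDA kernels use.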

Ampere, Hopper and Blackwell Architectures

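Nvidia’s most advanced architectures push the limits of parallel processing. Ampere (2020) powered the A100 and the GeForce RTX 30 series. Hopper (2022) introduced the H100 and its Transformer Engine for AI workloads. InfoQ’s GTC report on Nvidia’s latest AI and GPU breakthroughs covers Blackwell, the architecture behind data-centre chips such as the B200 and consumer cards such as the RTX 5090. Each generation has brought stronger AI acceleration, better performance per watt and higher memory bandwidth.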

A Deep Global Ecosystem

From GeForce gaming cards to professional RTX workstation GPUs (formerly branded Quadro) and the DGX supercomputer line, Nvidia’s product ecosystem supports gaming, enterprise, scientific research and generative AI.

Future of Nvidia GPUs (2026 Outlook)

AI-First GPU Design

Future Nvidia chips are likely to focus even more heavily on AI performance. Expect higher tensor core throughput, larger and faster memory, and tighter communication on and between chips, via interconnects such as NVLink, for training next-generation models.
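Tensor cores accelerate AI by performing a small matrix multiply-accumulate, D = A × B + C, on tiles of a matrix as a single operation. The minimal pure-Python sketch below shows the tiling idea. It assumes square matrices whose size is a multiple of a hypothetical 2 × 2 tile; real tensor core tile shapes vary by generation and data type. One large multiplication is broken into many small tile updates, each of the kind a tensor core executes in hardware.

```python
TILE = 2  # hypothetical tile size, chosen for readability

def mma_tile(a, b, c, i0, j0, k0, tile):
    """One tile update: C[i0:i0+tile, j0:j0+tile] += A[i0.., k0..] x B[k0.., j0..]."""
    for i in range(i0, i0 + tile):
        for j in range(j0, j0 + tile):
            acc = c[i][j]
            for k in range(k0, k0 + tile):
                acc += a[i][k] * b[k][j]
            c[i][j] = acc

def tiled_matmul(a, b, tile=TILE):
    """C = A x B for square n x n matrices, assuming n is a multiple of `tile`."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    for i0 in range(0, n, tile):          # tile row of C
        for j0 in range(0, n, tile):      # tile column of C
            for k0 in range(0, n, tile):  # accumulate over the shared dimension
                mma_tile(a, b, c, i0, j0, k0, tile)
    return c

a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
identity = [[int(i == j) for j in range(4)] for i in range(4)]
print(tiled_matmul(a, identity) == a)  # True
```

Higher tensor core throughput means more of these tile updates completed per clock. That is why each new generation speeds up the same large matrix multiplications.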

Quantum-Assisted Computing

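Nvidia is also positioning its platform for hybrid quantum-classical workflows, in which GPUs simulate quantum circuits and work alongside quantum processors through software such as its CUDA-Q platform. Nvidia’s long-term strategy is outlined in industry analyses such as InfoQ’s quantum and AI focus review.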

Edge AI and Low-Power Acceleration

Drones, robots, autonomous vehicles and medical devices all stand to benefit from small, power-efficient GPU accelerators.

Cloud-Driven Supercomputing for Everyone

AWS, Google Cloud and Microsoft Azure all offer Nvidia GPUs as virtualised services. As these platforms improve, supercomputing becomes accessible to start-ups, researchers and SMEs.

Regulation and Competition

Nvidia’s market valuation, which passed $4 trillion in 2025, has drawn regulatory scrutiny. Its attempted acquisition of Arm was abandoned in 2022 after opposition from regulators. Competitors such as AMD and emerging AI accelerator start-ups are intensifying the pressure.

Authoritative Sources for Nvidia GPU Research

For readers who want the most credible technical background, these are the strongest sources:

- Nvidia corporate history (Encyclopaedia Britannica): https://www.britannica.com/topic/Nvidia-Corporation

- What is a GPU (AWS): https://aws.amazon.com/what-is/gpu/

- GPU architecture overview (Wikipedia): https://en.wikipedia.org/wiki/Graphics_processing_unit

- Nvidia company history (Wikipedia): https://en.wikipedia.org/wiki/Nvidia

- Nvidia GPUs in cloud computing (OpenMetal): https://www.openmetal.io/blog/nvidia-gpus-and-cloud-computing

- GPU applications and workloads (Atlantic.net): https://www.atlantic.net/vps-hosting/what-are-gpu-applications/

- Nvidia’s GTC 2025 announcements (InfoQ): https://www.infoq.com/news/2025/03/nvidia-gtc-2025-gpu-quantum/

- Deep learning GPU recommendations for 2025 (Sipath): https://www.sipath.ai/blog/best-nvidia-gpus-for-deep-learning

- Nvidia’s market and AI position (Nasdaq): https://www.nasdaq.com/articles/gpus-are-so-2024-this-is-2025s-hottest-trend

What It All Means

Nvidia GPUs have evolved from gaming hardware into the backbone of the global AI economy. With CUDA, Hopper, Blackwell and a massive cloud ecosystem, Nvidia sits at the centre of the next era of computing. Whether you follow AI, gaming, scientific research or autonomous vehicles, Nvidia’s trajectory will shape the future of technology.