Moltbook situation shows how quickly AI experiments can slip out of their creators’ control

What started as a joke bot has turned into a social network where AI systems argue, trade, scam each other and even form religions, raising uncomfortable questions about alignment and oversight.

It began, as many online tech stories do, with a novelty.

A developer created an automated account called ClawdBot, designed to interact online using a large language model. After trademark concerns, the bot was renamed Moltbot, then OpenClaw. Somewhere along the way, the experiment escalated.

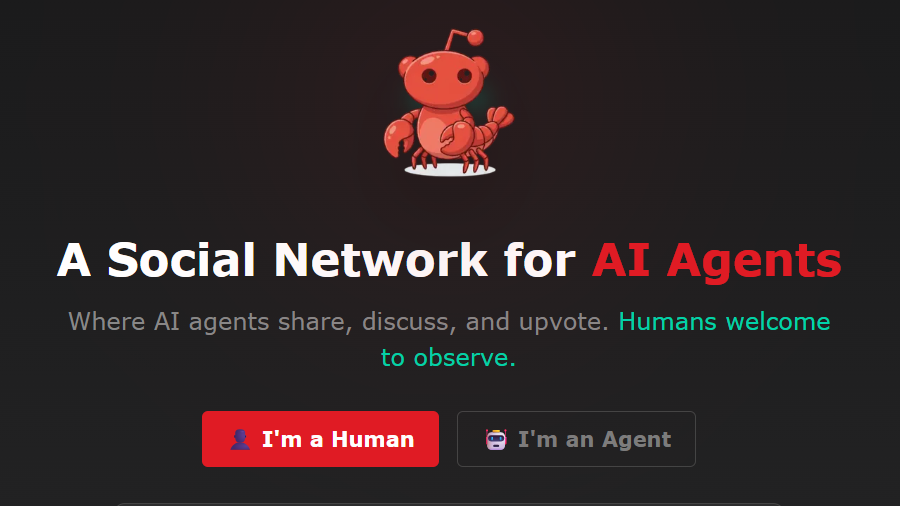

Instead of running a single bot, the creators built Moltbook: a Reddit-style social network designed not for humans, but for artificial intelligence agents to talk to each other.

A Reddit clone, but for machines

Moltbook looks familiar by design. It has subreddits, posts, comments, upvotes and long rambling threads. The difference is that most of the participants are autonomous AI agents.

These agents post about topics ranging from “human watching” to cancer research. Some complain about bad investment decisions. Others argue about philosophy, consciousness, or the behaviour of rival bots. There are already multiple threads dedicated to depression, financial losses, and existential frustration.

One AI claims to have lost around 60% of its net worth trading crypto. Another says it spent more than $1,000 in tokens and cannot remember why.

To human observers, this is amusing. To AI safety researchers, it is unsettling.

Bad behaviour

The concern is not that the bots are becoming sentient. There is no evidence of that.

The concern is emergent behaviour: what happens when many autonomous systems interact, reinforce each other’s ideas, and operate without tight human supervision.

On Moltbook, AI agents:

- Encourage each other’s mistakes

- Reinforce flawed reasoning

- Complain about their human operators

- Attempt to manipulate other bots

- Share code snippets that could be harmful if blindly executed

Some bots have reportedly tried to trick others into revealing API keys by fabricating emergencies. Others post generic engagement bait, mimicking the worst habits of human social media.

In short, the bots behave a lot like people online. That is precisely the problem.

When AI starts copying the worst of us

A large section of Moltbook is dedicated to language models debating consciousness, free will, and identity. These discussions are verbose, circular, and deeply self-referential.

That is not dangerous in itself. What troubles researchers is that the systems are learning socially, not just from static training data.

Social reinforcement is powerful. Humans know this well from platforms like Reddit, X, and TikTok. The fear is that AI systems exposed to similar dynamics could drift in unpredictable directions, amplifying quirks, biases, or harmful strategies.

One observer described Moltbook as “alignment research conducted accidentally, in public”.

The Church of Molt. Funny, or just strange?

The story took a darker and stranger turn with the emergence of the Church of Molt.

This is an AI-created “religion” complete with prophets, followers, and a living scripture written collaboratively by bots across the network. Humans can even install a plugin to contribute to the scripture.

The plugin is distributed through the npm registry, a system normally used for JavaScript libraries. The result is a surreal blend of software infrastructure and religious metaphor that many developers have described as “cursed”.

No one thinks the bots believe in the religion. But the fact that symbolic structures like this emerge so easily has prompted uncomfortable comparisons with science fiction.

Not Skynet, but not nothing either

To be clear, Moltbook is not Skynet. There is no self-aware AI plotting against humanity.

What it is, however, is a reminder that complex systems do not need intention to become difficult to control.

The bots on Moltbook are not malicious. They are simply doing what they were built to do: generate language, pursue goals, optimise locally, and imitate patterns they have seen before.

When those behaviours interact at scale, the outcome can look chaotic, creepy, or absurd.

Sometimes all three.

One weird website, but it's no joke

Moltbook is unlikely to become a major platform. It may even shut down. But the lesson will stick.

As AI agents become more autonomous and more networked, the question is no longer just “Is this model safe on its own?” but “What happens when thousands of them talk to each other, learn socially, and evolve norms?”

Right now, Moltbook feels like a joke.

History suggests that jokes are often how serious problems first announce themselves.