Model collapse and synthetic data: what happens when the internet fills with generated content

The most immediate effect of an internet awash with AI-generated text is not a dramatic moment where machines “break”. It is the slow erosion of information quality. Search results become more repetitive. Niche expertise is harder to find. Pages look authoritative but are built from recycled fragments. For readers, that means more time spent cross-checking and less confidence that the first answer is the right one. For the companies training the next generation of models, it raises a deeper problem: if tomorrow’s training data is increasingly made of yesterday’s model outputs, are we still learning from the world, or from a hall of mirrors?

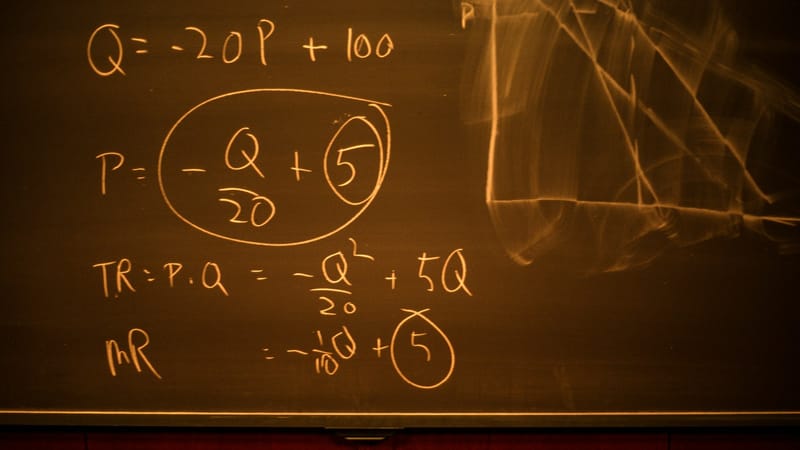

This concern has a name in the research literature: model collapse. The idea is straightforward. Generative models learn patterns from data. If a new model is trained on a growing share of synthetic material, and especially if that synthetic material replaces human-originated data, the model can drift. Rare details disappear first. Errors can become normalised. Outputs become more homogeneous, more generic, and less grounded in the messy variety of human language and experience. In the worst case, a system becomes increasingly confident while becoming less faithful to the world it is meant to describe.

The theory matters because it connects two trends that usually get discussed separately. One is the flood of generated content across the open web, in marketing, support pages, low-effort news rewrites, spam, and social media. The other is the reliance of modern AI development on web-scale corpora. If the input stream changes, the models change, and not necessarily for the better.

What “model collapse” does and does not mean

It is important to be precise, because “collapse” sounds like a cliff edge. In research, it is usually a gradual degradation observed across generations in controlled experiments. The main signal is that the model’s output distribution becomes narrower. It loses the tails. In practical terms, that can look like less diversity in phrasing, fewer uncommon facts, weaker handling of niche topics, and increased repetition of popular misconceptions.
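One rough way to put numbers on "narrower" is to measure lexical diversity over a batch of outputs. The sketch below is illustrative only: the function names `distinct_n` and `token_entropy` are hypothetical, whitespace splitting stands in for a real tokeniser, and neither metric is claimed to be the one used in any particular study.

```python
import math
from collections import Counter


def distinct_n(texts, n=2):
    """Share of n-grams that are unique across a batch of texts (1.0 = no repeats)."""
    ngrams = []
    for text in texts:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def token_entropy(texts):
    """Shannon entropy (in bits) of the token frequency distribution."""
    counts = Counter(tok for text in texts for tok in text.lower().split())
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


varied = ["the cat sat on the mat", "a dog ran through the park"]
repetitive = ["the cat sat on the mat", "the cat sat on the mat"]

print(distinct_n(varied), distinct_n(repetitive))
print(token_entropy(varied), token_entropy(repetitive))
```

Lower values on a later model generation, measured on comparable prompts, would be consistent with a narrowing distribution, although they cannot on their own say why the narrowing happened.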

This is not the same as saying synthetic data is always harmful. Synthetic data can be useful, sometimes extremely useful, when used as a supplement. It can help fill gaps where real data is scarce, expensive, or sensitive. It can provide labelled examples for training, or structured question and answer pairs for instruction tuning. The risk is the feedback loop, where synthetic content becomes a dominant part of the training diet without provenance, without controls, and without enough fresh, high-quality real material to anchor the system.

Nor is model collapse a claim about an inevitable future where all AI becomes useless. It is a warning about what can happen under specific conditions, particularly when synthetic data replaces real data and when filtering and sampling choices amplify errors over time.

How researchers test the idea

The evidence base comes from experiments designed to mimic a synthetic future. Researchers train a model, generate synthetic samples, and then train the next model on a mixture that contains a larger share of synthetic content. They repeat the process. The model is then evaluated on held-out real data to see whether it still generalises to the original distribution.

Across multiple model families, researchers have reported patterns that fit the collapse story. The details vary by domain, but the broad shape is consistent. As the synthetic share rises and fresh real data becomes scarce, quality and diversity tend to degrade. In images, this can appear as mode dropping and distorted representations of less common categories. In text, it can show up as narrowing vocabulary, repetitiveness, and reduced coverage of niche topics.

Researchers also use analytical “toy” models to show the mechanism in a simplified setting. These models make it easier to see why tails vanish first. If a model has small systematic errors, and those errors become part of the training distribution, they can be amplified with each generation. The next model learns the error as if it were a signal. Over time, the distribution shifts.
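The tail-loss part of this can be reproduced in a few lines. The sketch below is a deliberately crude toy, not a reconstruction of any published experiment: the "model" is just a table of token frequencies, the "real" data follows an assumed Zipf-like distribution, and each generation is trained only on samples from the previous one. The error here is plain sampling noise rather than a systematic bias, but the effect is the same kind: once a rare token fails to appear in one generation's sample, no later generation can produce it. The function name and all parameters are hypothetical.

```python
import random
from collections import Counter


def run_generations(num_tokens=50, sample_size=200, generations=30, seed=0):
    """Refit a frequency-table 'model' on its own samples; track surviving tokens."""
    rng = random.Random(seed)
    tokens = list(range(num_tokens))
    # Assumed Zipf-like "real" distribution: token k has weight 1 / (k + 1).
    weights = [1.0 / (k + 1) for k in tokens]
    data = rng.choices(tokens, weights=weights, k=sample_size)

    surviving = []
    for _ in range(generations):
        counts = Counter(data)         # "train": estimate token frequencies
        surviving.append(len(counts))  # distinct tokens the model can still emit
        vocab = list(counts)
        freqs = [counts[t] for t in vocab]
        # "generate": the next training set comes from the fitted model alone
        data = rng.choices(vocab, weights=freqs, k=sample_size)
    return surviving


history = run_generations()
print(history[0], history[-1])
```

The count of surviving tokens can only stay flat or fall, and it is the low-frequency tokens that drop out first, which is the toy version of "losing the tails".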

This is the strongest form of the argument, because it links observed degradation to a plausible mathematical mechanism rather than relying only on anecdote.

The competing view, and why it is plausible

There is, however, a serious counter-argument. Some researchers argue that the most alarming results depend on an assumption that may not hold in practice: replacement. In the harshest experimental setup, each generation’s synthetic data replaces much of the real data. That is a worst-case scenario, and it produces the clearest collapse.

A more realistic setup might be accumulation. Real data does not necessarily disappear. It may persist alongside synthetic material, particularly in private datasets, licensed archives, curated corpora, academic repositories, books, and high-quality domain sources. Under an accumulation model, the claim is that collapse can be avoided or bounded because the original anchor remains present.
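The difference between the two setups can be illustrated with a toy simulation. This is a sketch under strong assumptions, not a reproduction of any paper's method: the "model" is a Gaussian fitted to a small sample, the spread of the data stands in for diversity, and `simulate`, `fit_gaussian`, and the parameter values are all hypothetical.

```python
import math
import random


def fit_gaussian(xs):
    """Maximum-likelihood mean and standard deviation of a sample."""
    mu = sum(xs) / len(xs)
    var = sum((x - mu) ** 2 for x in xs) / len(xs)
    return mu, math.sqrt(var)


def simulate(strategy, n=20, generations=200, seed=0):
    """Return the spread of the training pool after repeated self-training."""
    rng = random.Random(seed)
    pool = [rng.gauss(0.0, 1.0) for _ in range(n)]  # stand-in for real data
    for _ in range(generations):
        mu, sigma = fit_gaussian(pool)
        synthetic = [rng.gauss(mu, sigma) for _ in range(n)]
        if strategy == "replace":
            pool = synthetic          # real data is discarded each round
        elif strategy == "accumulate":
            pool = pool + synthetic   # real data stays in the pool
        else:
            raise ValueError(f"unknown strategy: {strategy}")
    return fit_gaussian(pool)[1]


print("replace:   ", simulate("replace"))
print("accumulate:", simulate("accumulate"))
```

Under replacement the fitted spread drifts towards zero over many generations. Under accumulation the original sample keeps anchoring the estimate, so the spread stays close to where it started. A one-dimensional Gaussian is far simpler than a language model, so this shows only the shape of the argument, not its magnitude in practice.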

This matters because it suggests that model collapse is not a fate but an outcome of choices. If labs maintain stable pools of human-originated data, treat synthetic data as augmentation rather than replacement, and manage the mixture carefully, the feedback loop may be kept under control.

There is also a second layer of uncertainty. Some symptoms associated with collapse, especially in text, can be produced by other forces. Aggressive deduplication, filtering, and safety tuning can make models sound generic and repetitive even without heavy synthetic recursion. Separating “collapse” from these other pressures is not trivial.

The honest conclusion is that the mechanism is plausible and supported by experimental evidence, but the severity and inevitability depend on how training pipelines are built and how the web evolves.

What it means for search and information quality

Even if collapse is not inevitable for frontier labs, the web itself can still degrade. Search engines and social platforms have always had to cope with spam, content farms, and low-quality duplication. Generative AI lowers the cost of producing plausible text to near zero. That changes the scale of the problem.

The effect is not simply more falsehood. It is more content that is unoriginal, poorly sourced, and optimised to trigger ranking signals rather than to inform. When the web fills with content that looks like an answer but is not anchored to reporting, documentation, or lived experience, the practical experience of search gets worse. Users compensate by relying more on a few trusted domains and by adding search terms or operators such as “PDF”, “GitHub”, “paper”, or “site:”. They also shift to platforms that contain signals of authenticity, such as forums with reputational histories, expert communities, and primary source repositories.

Search engines can respond by weighting originality, expertise, citations, and user satisfaction signals. They can also adopt provenance standards and watermark detection. But there is a trade-off. Over-aggressive filtering can demote legitimate content, especially from smaller sites or non-native English writers. Under-filtering allows the sludge to rise.

What it means for misinformation

The second risk is the industrialisation of persuasion. Disinformation campaigns have long relied on volume and repetition. Generative models can produce endless variants, tailored to audiences, local contexts, and trending topics. The result is less a single viral lie and more a background noise of plausibility, which makes it harder for ranking systems, fact-checkers, and readers to spot what matters.

There is also a less discussed risk: error normalisation. If generated content repeats the same misconceptions, and that content becomes part of training data or of widely surfaced search results, the misconception can become entrenched. The system is not “lying” in a deliberate sense. It is amplifying and stabilising a mistake through repetition.

What it means for future model training

For model builders, the key challenge is independence. Training data is valuable partly because it contains independent observations of the world, expressed in diverse ways by many people. Synthetic data is not independent. It is a transformation of existing patterns. If it dominates the pool, the model is learning itself.

This is why there is growing emphasis on curated datasets, licensing, and provenance. It is also why companies are investing in human feedback pipelines and in data collection that captures real interactions and real outcomes. In effect, human-originated data becomes more valuable as the open web becomes less reliable.

Some labs are also exploring approaches that rewrite or “refine” data to make it usable, for example by removing personal identifiers, toxicity, or formatting noise while trying to preserve informational content. This can extend the life of existing corpora, but it raises a philosophical question. If the refinement is done by a model, how much independence remains?

The mitigation toolbox, and its limits

Provenance standards aim to give content a verifiable origin story. If content carries cryptographic metadata that survives normal editing and publishing workflows, platforms and crawlers can make more informed decisions about what to trust and what to include in training. The difficulty is adoption. Provenance only works at scale if major toolmakers and platforms implement it, and if the metadata is preserved rather than stripped.

Watermarking aims to embed a detectable signature in generated content, particularly text and images. Some watermarking schemes are designed to survive light editing. The problem is that text is malleable. Heavy rewriting, translation, or adversarial transformations can reduce watermark detectability. Watermarking can still be useful, especially for flagging high-confidence synthetic content, but it is unlikely to be a universal filter.
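To make the detection idea concrete, the sketch below assumes a "green list" style scheme, in which a secret key and the preceding token mark roughly half the vocabulary as favoured, and the generator leans towards favoured tokens. That is only one family of watermarking approaches and is an assumption here, not something the discussion above specifies. The key, the toy vocabulary, and the functions `is_green`, `generate_watermarked`, and `watermark_z_score` are all hypothetical, and the toy generator always picks a favoured token, which a real system would not.

```python
import hashlib
import math
import random

GREEN_FRACTION = 0.5  # assumed share of the vocabulary favoured at each step


def is_green(prev_token, token, key="demo-key"):
    """Keyed hash of (previous token, token) decides whether the token is favoured."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] < int(256 * GREEN_FRACTION)


def generate_watermarked(length, vocab, seed=0, key="demo-key"):
    """Toy generator: always pick a favoured token when one exists."""
    rng = random.Random(seed)
    tokens = [rng.choice(vocab)]
    while len(tokens) < length:
        green = [t for t in vocab if is_green(tokens[-1], t, key)]
        tokens.append(rng.choice(green or vocab))
    return tokens


def watermark_z_score(tokens, key="demo-key"):
    """How far the favoured-token count sits above what chance would give."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    n = len(pairs)
    green = sum(is_green(prev, tok, key) for prev, tok in pairs)
    expected = GREEN_FRACTION * n
    std = math.sqrt(n * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / std


vocab = [f"w{i}" for i in range(50)]
marked = generate_watermarked(101, vocab)
rewriter = random.Random(1)
rewritten = [rewriter.choice(vocab) for _ in marked]  # crude stand-in for heavy rewriting

print(watermark_z_score(marked), watermark_z_score(rewritten))
```

The untouched sequence scores far above chance, while the fully rewritten one falls back towards zero, which is the fragility described above. Partial edits would land somewhere in between, which is why detection is better treated as a confidence signal than as a yes or no answer.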

For training data, the most robust approach remains curation and mixture control. That means maintaining pools of demonstrably human-originated content; using synthetic data selectively; testing for regressions; and tracking provenance where possible. It also means recognising that the open web is becoming a noisier signal, and that the future of high-quality model training may depend more on structured, licensed, and private sources.
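Mixture control can be as simple as enforcing a ceiling on the synthetic share when a training set is assembled. The sketch below assumes each document already carries a trustworthy provenance label, which is the hard part in practice. The function name `build_training_mix`, the 20% cap, and the label strings are illustrative assumptions rather than recommended values.

```python
import random


def build_training_mix(human_docs, synthetic_docs, total,
                       max_synthetic_share=0.2, seed=0):
    """Sample a training set whose synthetic share never exceeds the cap."""
    if not 0.0 <= max_synthetic_share <= 1.0:
        raise ValueError("max_synthetic_share must be between 0 and 1")
    rng = random.Random(seed)
    # Limit synthetic documents by both the share cap and what is available.
    n_synthetic = min(int(total * max_synthetic_share), len(synthetic_docs))
    n_human = total - n_synthetic
    if n_human > len(human_docs):
        # Refuse to backfill with synthetic data when human data runs short.
        raise ValueError("not enough human-originated documents to honour the cap")
    mix = [(doc, "human") for doc in rng.sample(human_docs, n_human)]
    mix += [(doc, "synthetic") for doc in rng.sample(synthetic_docs, n_synthetic)]
    rng.shuffle(mix)
    return mix


human = [f"human-{i}" for i in range(100)]
synthetic = [f"synthetic-{i}" for i in range(100)]
mix = build_training_mix(human, synthetic, total=50)
share = sum(1 for _, source in mix if source == "synthetic") / len(mix)
print(len(mix), share)
```

Failing loudly when human-originated data runs short, rather than quietly topping up with synthetic material, is a design choice that keeps the cap meaningful. Keeping the source label next to each document also makes it possible to audit the mixture later.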

Uncertainty and competing views

There is enough evidence to take model collapse seriously as a risk in uncontrolled feedback loops. There is also enough evidence to say it is not a foregone conclusion. The sharpest disagreements are about assumptions. If synthetic data replaces real data and provenance is absent, degradation is likely. If real data remains present and synthetic data is accumulated and carefully managed, collapse can be avoided or bounded.

The second uncertainty is measurement. We do not have a clean, real-time instrument panel for “how synthetic the web is” or “how contaminated a training corpus is”. Detection tools can be unreliable. Studies can disagree based on methodology, language coverage, and the definition of “AI-written”. That leaves plenty of room for exaggerated claims on both sides.

The prudent stance is to treat information quality as a real-world problem that can be observed already, even if the long-term training implications remain contested.

How to keep your information diet clean

The simplest defensive move is to privilege primary sources. When a claim matters, follow it back to the original paper, dataset, regulatory filing, transcript, code repository, or official statement, then read the context rather than the paraphrase.

The second move is to use triangulation as a habit. Look for two independent confirmations from reputable outlets or institutions. If every result looks like the same wording, or the same structure with different headings, assume it is recycled.

The third move is to treat anonymous, citation-free explainers as low-grade. Good writing can still be wrong, but the absence of sources is a warning sign, particularly for technical or medical claims.

The fourth move is to recognise synthetic “tells” without becoming overconfident. Excessively smooth prose, generic qualifiers, and a lack of concrete detail can be signals, but humans write like that too. Use these cues to trigger verification, not as proof.

The fifth move is to build a shortlist of high-signal domains. Standards bodies, academic venues, reputable labs, and official statistics providers tend to publish material that is easier to verify. Specialist communities, when well moderated, can also be valuable because expertise is visible and contested in public.

The sixth move is to be deliberate about search. Add constraints that pull in primary material, and prefer results that show provenance, authorship, dates, and citations. If a topic is fast-moving, check dates and look for updates or corrections.

The bottom line

As generated content floods the internet, the risk is not a sudden collapse, but a gradual dilution of the web’s informational value. That affects readers first, then search, then the training pipelines that depend on the open internet. The research case for model collapse is strongest in uncontrolled recursion, where synthetic data replaces real data. The best counter-case is that collapse can be avoided through accumulation, curation, and provenance. Either way, the direction of travel is clear. High-quality, verifiable human-originated information is becoming scarcer, more valuable, and more worth defending.