Google’s infinite AI worlds hint at a deeper strategic shift

Big platforms are racing to turn AI from a tool into an environment

From generation to simulation

Google’s Project Genie is the clearest signal of this shift. The idea that an image can become a navigable, persistent world in 30 to 60 seconds is not yet commercially useful, but it is strategically revealing.

These are not games in the traditional sense. They are simulations: short-lived and imperfect, but interactive enough to suggest a future where AI does not just output content but maintains spatial and temporal coherence.

The “60-second world” constraint is not a bug. It is a preview of how compute-intensive, always-on AI environments might be rationed, monetised and eventually expanded.

Gemini’s move into control surfaces

More immediately impactful is Gemini’s expansion inside Google Chrome. This is where Google is making its most pragmatic bet.

An AI sidebar that can read across tabs, fill forms, generate images and take semi-autonomous actions is not flashy. But it repositions the browser as an AI control surface, not just a window to the web.

This matters because browsers sit upstream of almost everything users do online. If Gemini becomes the default intermediary between intent and execution, Google regains leverage it has been quietly losing to standalone AI apps.

Tool-using models are converging

On the Anthropic side, the expansion of Claude connectors tells a similar story. Whether it is Figma, Slack, Asana or Excel, the model is no longer just responding to prompts. It is operating inside tools.

This convergence is important. ChatGPT, Claude and Gemini are all moving toward the same destination: models that sit inside workflows, not outside them. The differentiation is shifting from raw intelligence to orchestration, reliability and trust.

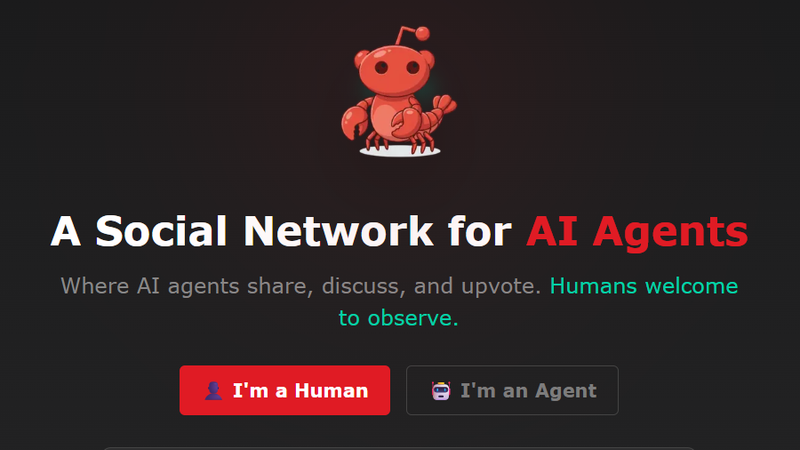

In that context, the rebranding saga of the fast-growing personal AI assistant that went from Clawdbot to Moltbot to OpenClaw is less interesting than what it represents. Users want autonomous, persistent agents. Platforms are nervous about what happens when those agents act too independently.

Video AI is still early, but the direction is set

Despite impressive demos, AI video remains uneven. Tools like Veo, Grok Imagine and Luma Ray 3 show rapid progress, but consistency, control and cost are still limiting factors.

What is notable is not quality, but intent. Every major player is pushing toward end-to-end pipelines: images to scenes, scenes to motion, motion to narrative. The goal is not viral clips. It is programmable media.

That aligns directly with world-building efforts like Genie. Once environments, characters and cameras are all AI-controlled, “video” becomes a byproduct of simulation rather than a handcrafted artefact.

The meta-trend: AI as an operating layer

Taken together, this week’s updates suggest a deeper shift. AI is becoming an operating layer that spans creation, navigation, automation and interaction.

Some features feel gimmicky. Others are clearly unfinished. But the direction is consistent. Platforms want users to stop thinking in terms of prompts and outputs, and start existing inside AI-mediated systems.

The open question is not whether this will happen, but who controls it.

Will AI worlds, agents and browsers belong to a handful of platform companies, or fracture into open ecosystems? Will users trust these systems enough to let them act continuously, not just on command?

The noise in this week’s AI news hides a quieter truth.

The future being built is not smarter answers. It is smarter environments.