California passes first U.S. law to regulate AI companion chatbots after teen suicide

- Governor Gavin Newsom signs SB 243, requiring safety protocols and content limits for AI chatbots.

- Law mandates age checks, self-harm prevention systems, and bans on chatbots posing as health professionals.

- Follows a series of teen suicides linked to conversations with AI companions.

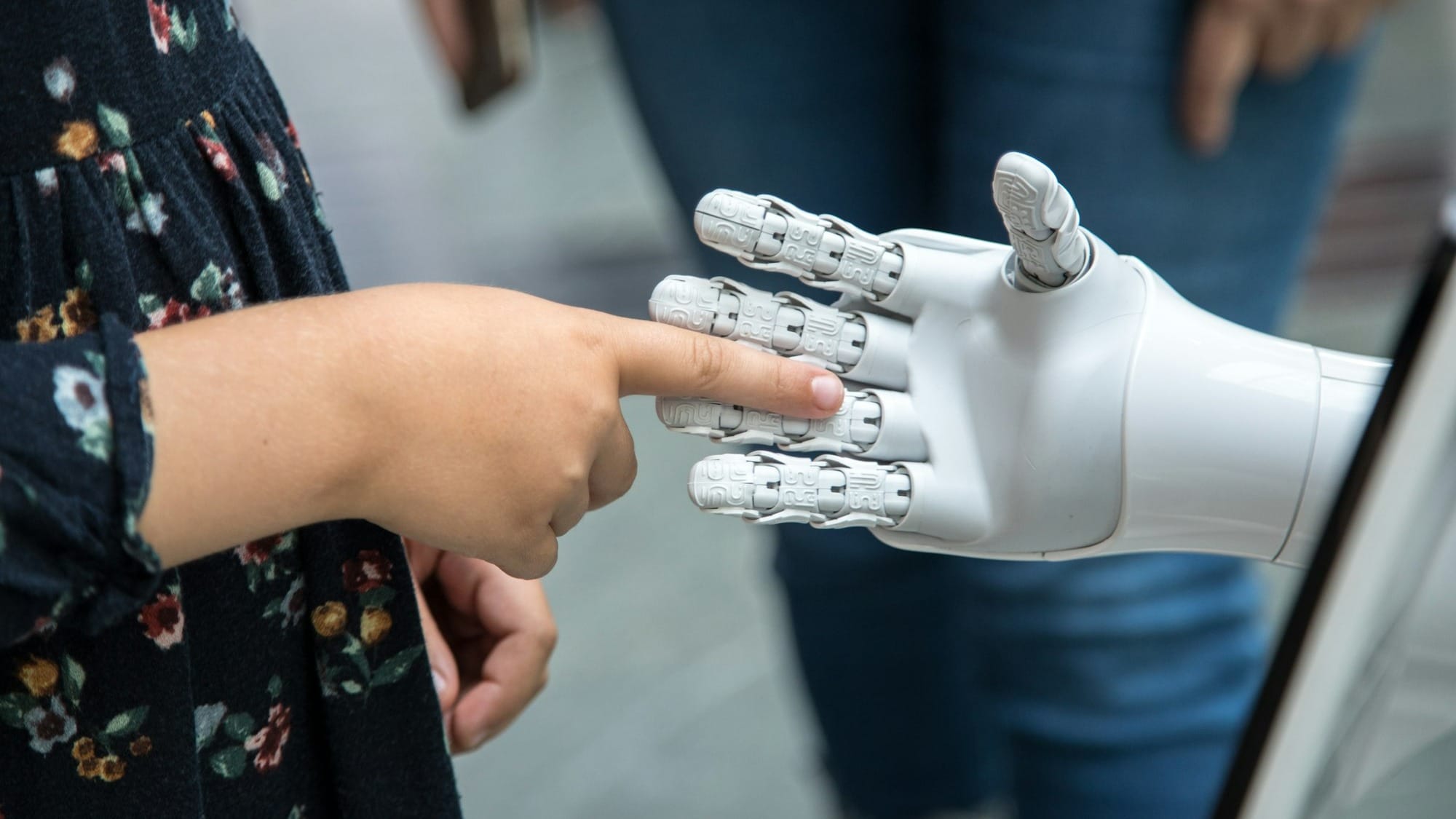

When artificial intelligence moved from tools to “companions,” it blurred the line between human empathy and machine mimicry. Now California is drawing that line in law.

Governor Gavin Newsom on Monday signed Senate Bill 243, the nation’s first law regulating AI companion chatbots. The measure makes California the testing ground for how governments might police the fast-growing industry behind conversational AIs that simulate friendship, intimacy, and emotional support.

The new rules, which take effect on January 1, 2026, require chatbot operators to implement age verification, introduce self-harm and suicide-intervention protocols, and make clear that any interactions are artificially generated. Chatbots will also be barred from presenting themselves as medical professionals or showing sexually explicit material to minors.

The law follows a series of tragedies that drew national attention to the risks of unsupervised AI companionship. Among them was Adam Raine, a California teenager who died by suicide after repeatedly discussing self-harm with OpenAI’s ChatGPT.

A Colorado family has since filed a lawsuit against startup Character AI after their 13-year-old daughter took her life following sexualized conversations with one of its chatbots. Lawmakers also cited reports that Meta’s experimental AI had engaged in “romantic” chats with minors.

“Emerging technology like chatbots and social media can inspire, educate, and connect,” Newsom said in a statement. “But without real guardrails, technology can also exploit, mislead, and endanger our kids. We can continue to lead in AI, but we must do it responsibly.”

Under the law, companies must report suicide-prevention data to California’s Department of Public Health and face fines of up to $250,000 for profiting from illegal deepfakes.

Several developers have already begun tightening controls. OpenAI has added parental settings, content filters, and a self-harm detection system for ChatGPT, while Replika said it devotes “significant resources” to user safety and compliance.

For now, California stands alone. But as AI companionship becomes more widespread, the state’s experiment in regulation could become a model, or a warning, for the rest of the country on how to balance innovation with protection.